Ice Lake AI / ML Workstation which shouldn't exist (V2) (V1 = 4TB RAM)


V2: No more 4TB; see the change log. The hardware part list won't be changed.

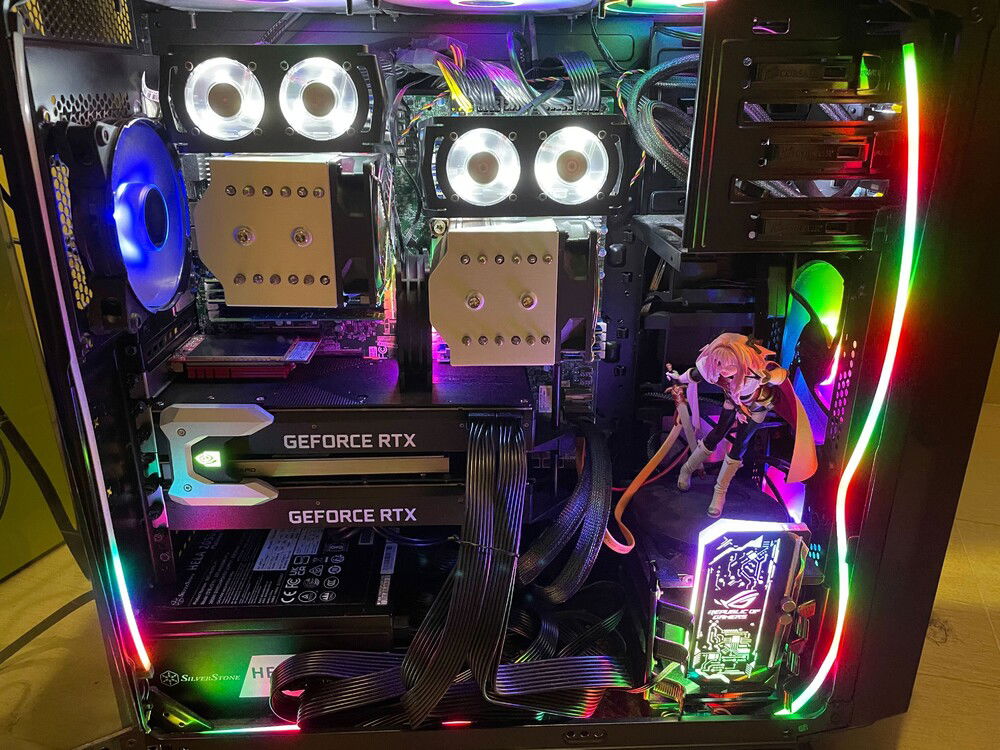

tldr: This is a bizarre workstation transformed from my X99 E-ATX PC, which was a ROG R5E with an i7-5960X. The case, fans, and PSU remain.

The most up-to-date list for this build will be on GitHub.


Part list with details

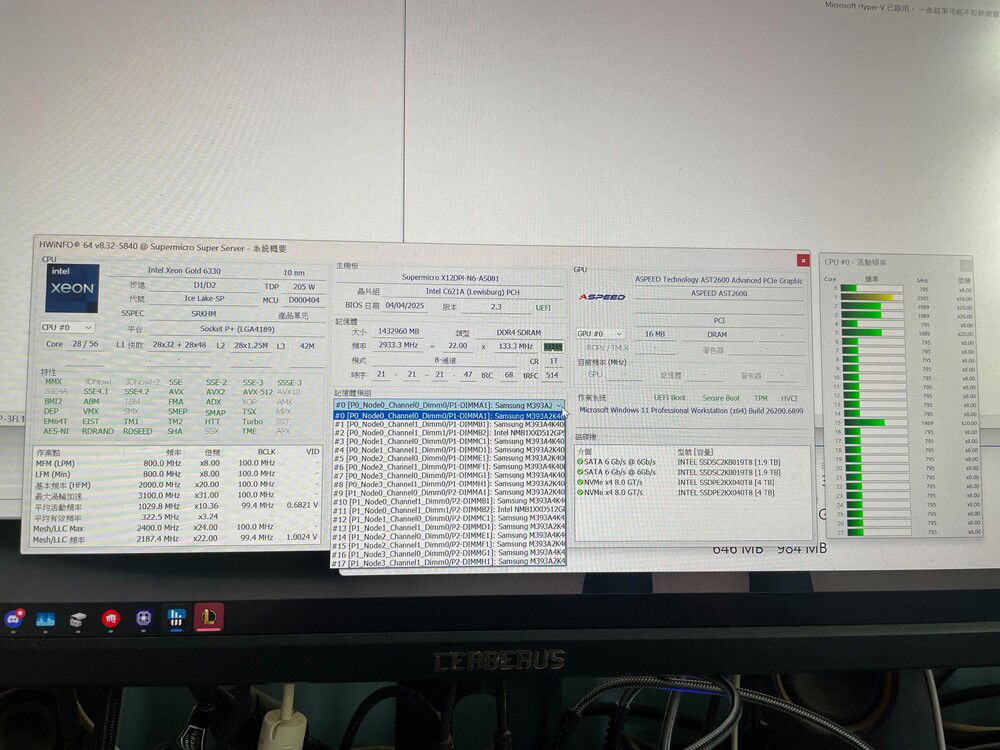

It is obviously a dual-CPU build with all 16 RAM slots populated, providing 64c/128t and 512GB of memory. I didn't list duplicate parts twice. It also has dual 22GB GPUs, and SATA + U.2 SSDs with 10TB of capacity.

CPU

As noted in the eBay listing, the CPU "QV2E", a.k.a. the Intel Xeon Platinum 8358 ES (engineering sample), has very limited compatibility: it works only on the X12DPi-N6 series, only with the early 1.1b BIOS, and only in a dual-CPU configuration, hence the low price. Its 32c/64t is not the best among the Ice Lake CPUs, but it is well balanced, with a higher single-core frequency.

CPU power cable

Its TDP is 250W, and all 250W goes through a single CPU 8-pin connector, which can be risky with most PC PSUs. Although a typical 18 AWG wire can carry up to about 7A, or around 360W per connector, the PSU's 12V rail may not be able to deliver that much power. Top-tier PSUs like my HELA 2050 Platinum, with 16 AWG cables and a massive 2kW single rail, can handle it, but cheaper mining PSUs may not. Therefore, a "PCIe 8-pin to CPU 8-pin" adapter gives better compatibility. The NVIDIA Tesla GPU "ESP12V" power adapter is the best choice, since Tesla cards also draw 250W.
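To put those connector numbers in perspective, here is a back-of-the-envelope sketch. It assumes the 8-pin CPU (EPS12V) connector carries +12V on 4 of its 8 pins and takes the ~7A per 18 AWG wire quoted above at face value; it is not a connector or PSU rating. At exactly 7A it comes out a little under the ~360W mentioned (about 336W), which on paper still leaves headroom over a 250W CPU. It says nothing about whether the PSU's 12V rail can actually supply that current.

```python
# Back-of-the-envelope capacity of one 8-pin CPU (EPS12V) connector.
# Assumptions: 4 of the 8 pins carry +12 V (the rest are ground returns),
# and each 18 AWG wire is good for ~7 A, as quoted in the text.
# This does not model whether the PSU's 12 V rail can deliver the current.

RAIL_VOLTAGE_V = 12.0
SUPPLY_WIRES = 4


def connector_capacity_w(amps_per_wire: float, supply_wires: int = SUPPLY_WIRES) -> float:
    """Total power the +12 V wires can carry at the given per-wire current."""
    return RAIL_VOLTAGE_V * amps_per_wire * supply_wires


def headroom_w(load_w: float, amps_per_wire: float) -> float:
    """Spare wire capacity left on one connector for a given CPU load."""
    return connector_capacity_w(amps_per_wire) - load_w


if __name__ == "__main__":
    print(f"capacity at 7 A/wire: {connector_capacity_w(7.0):.0f} W")  # 336 W
    print(f"headroom with a 250 W CPU: {headroom_w(250, 7.0):.0f} W")  # 86 W
```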

CPU Cooler

The CPU cooler's official brand name should be "coolserver LGA4189-M96", but the Leopard / Jaguar variants should also be fine. Unlike Noctua coolers, its 92mm fan with 6 heat pipes is reasonably capable of handling the 250W CPU (its rated power limit is 320W). The maximum CPU temperature in the built case is 83C, which is within the temperature limit.

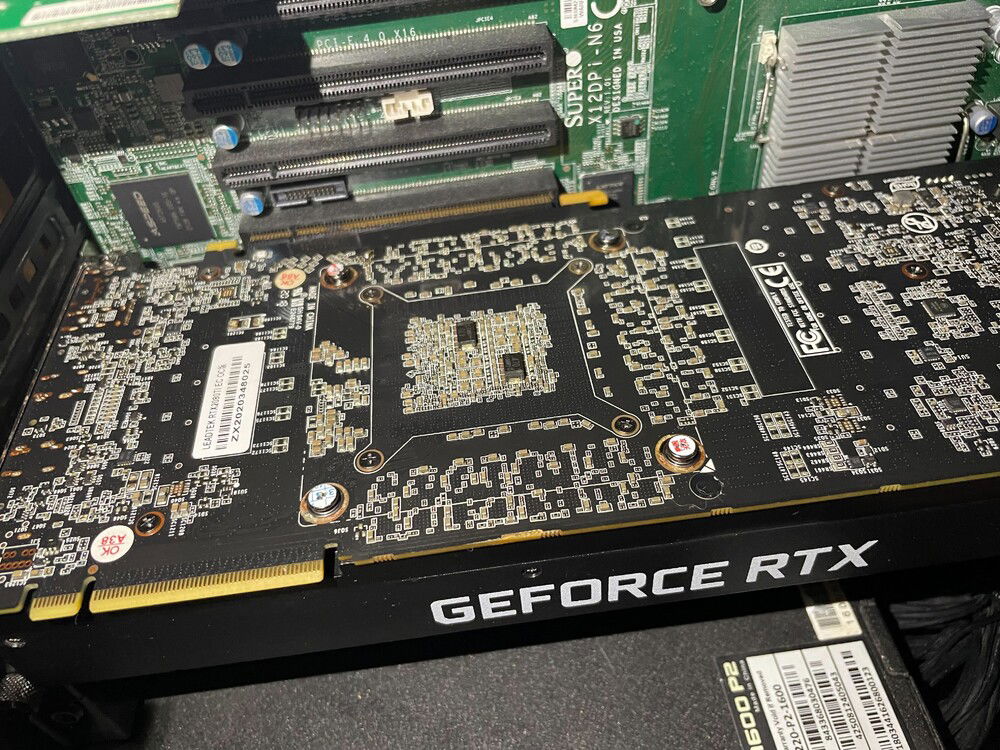

Motherboard

This motherboard is, surprisingly, an **exclusive** Supermicro X12DPi-N6-AS081 with 3x CPU 8-pin connectors and an additional VRM heatsink. "AS081" is shown in CPU-Z, and the variant should be verifiable with Supermicro (but don't upgrade the BIOS!). It is claimed to support 330W CPUs like the 8383C. Here is an example of this board with a 300W 8375C.

Its connector placement is awful: it limits GPU choice, and special connectors are required. For example, the vertical SFF8654 and SFF8087 connectors block both the length and height clearance of the GPUs.

BMC and IPMI are new to me. The BMC provides boot codes, and IPMI supports BIOS flashing and is somehow supported in AIDA64.

In theory, within these physical constraints, it should be compatible with most professional blower cards like the RTX 8000 / A6000 / RTX 6000 Ada, and the recent modded RTX 3090 / 4090 blowers. With a custom-built fan housing, a P100 / V100 with an external blower should also be fine.

Memory

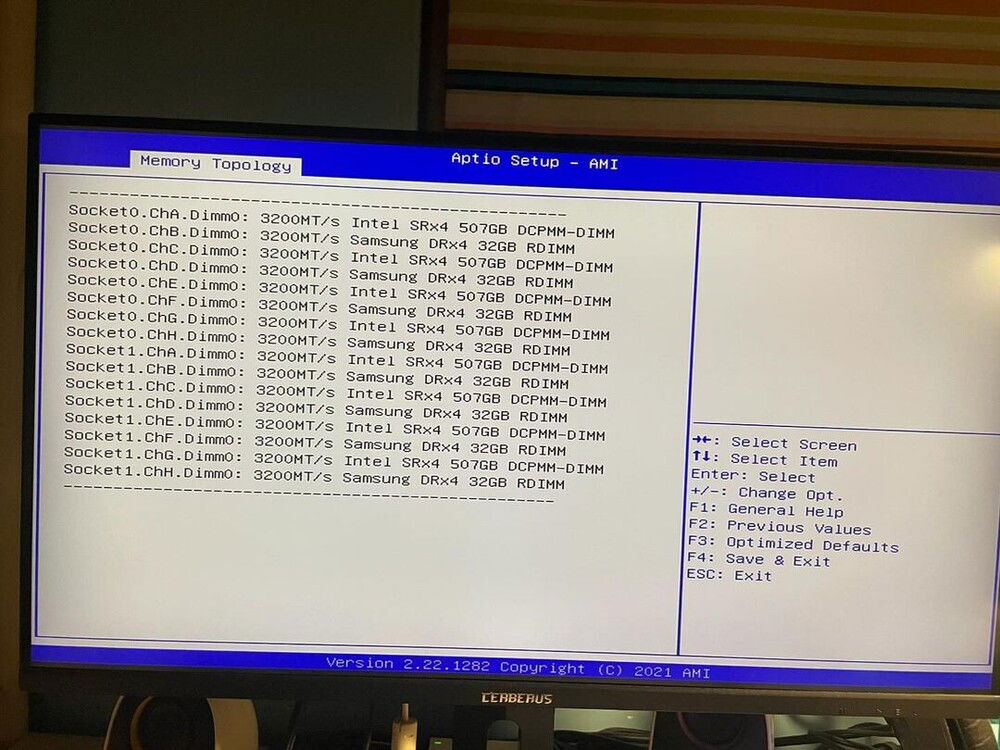

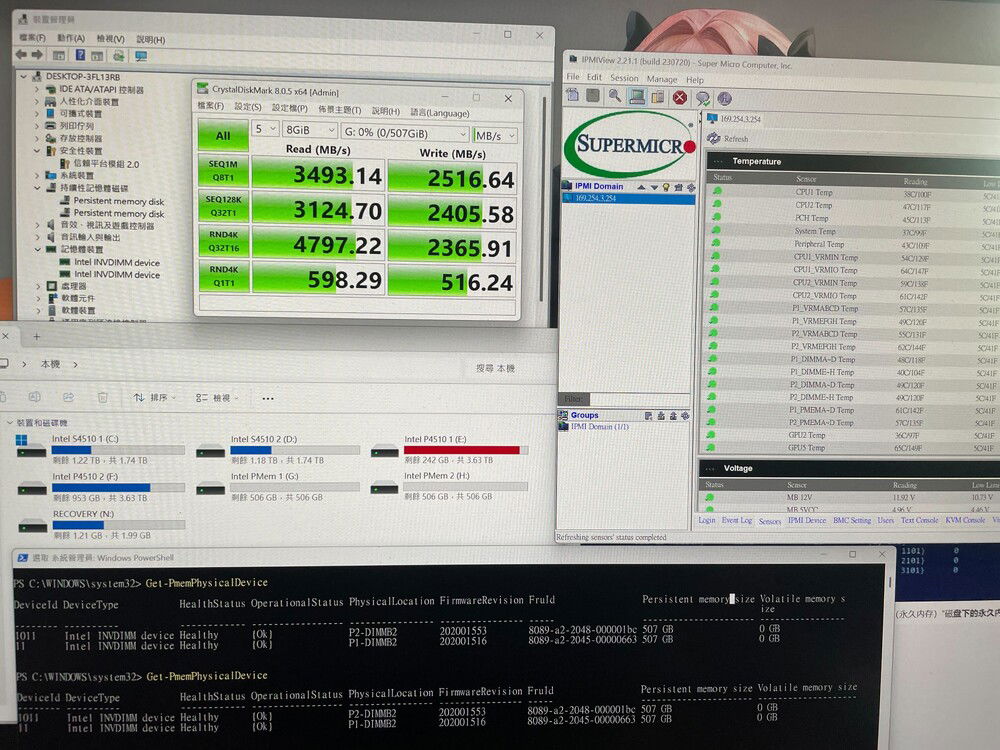

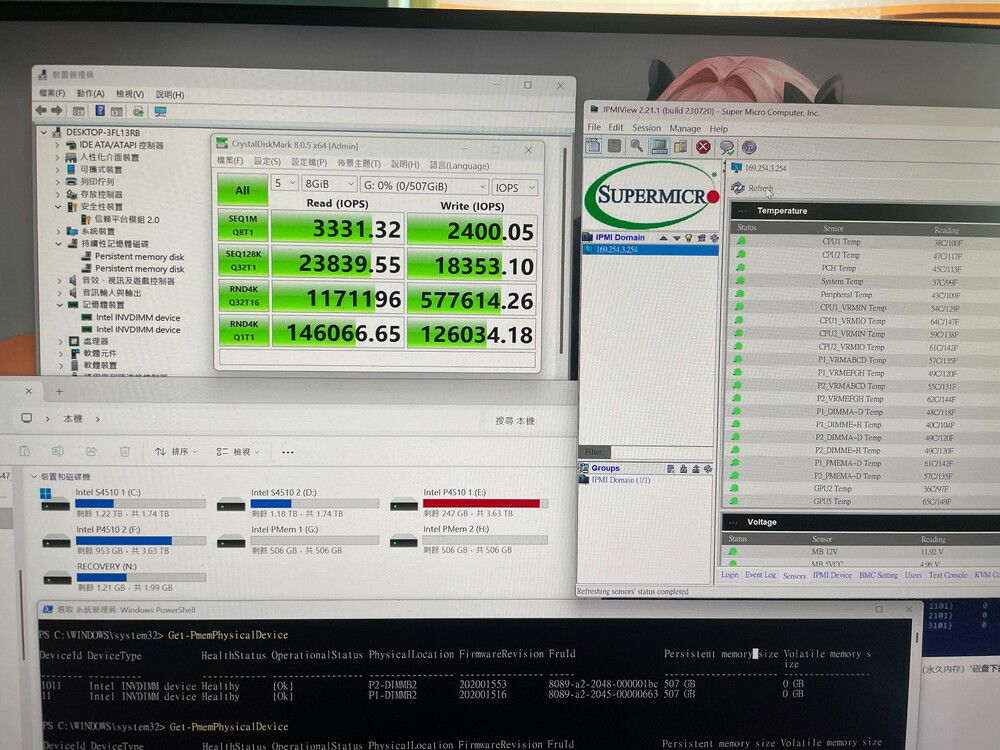

4TB RAM. For real. 8x Samsung 32GB 2Rx4 RDIMM 3200MHz, plus 8x Intel 2nd-gen PMem 512GB DCPMM 3200MHz. Check out LTT's video on how to achieve this insane amount of RAM.

The first boot was a pain. The BIOS version reported confusing info; eventually I cleared CMOS (reset the BIOS) and it booted fine. Memory training is long (5 min), but it becomes fast after the first boot. I found that PMem may require large CL / CAS values (latency) because of its memory dies. If you run low-bandwidth DIMMs with tight (low-latency) timings alongside it, memory faults may occur, making boot times much longer and causing some BSODs.

The motherboard supports 2133 / 2400 in the BIOS, which is undocumented in the user manual. Since I can't afford too many items at once, the DIMMs are still slow ones. I may upgrade them to 3200 once I have enough budget. It should be fine as long as all 16 sticks are identical.
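To see roughly what running the DIMMs at 2133 / 2400 instead of 3200 costs, here is a peak-bandwidth sketch. It assumes 8 DDR4 channels per Ice Lake-SP socket, two sockets, one DIMM per channel (16 sticks), and an 8-byte channel width; these are theoretical peaks, and measured bandwidth will be lower.

```python
# Theoretical peak DRAM bandwidth for the whole system.
# Assumptions: 8 DDR4 channels per Ice Lake-SP socket, 2 sockets,
# one DIMM per channel (16 sticks), 8 bytes per transfer per channel.

CHANNELS_PER_SOCKET = 8
SOCKETS = 2
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(mt_per_s: int, sockets: int = SOCKETS) -> float:
    """Peak bandwidth in GB/s (10^9 bytes/s); measured numbers will be lower."""
    # MT/s * bytes per transfer = MB/s per channel; divide by 1000 for GB/s.
    return CHANNELS_PER_SOCKET * sockets * mt_per_s * BYTES_PER_TRANSFER / 1000


for speed in (2133, 2400, 3200):
    print(f"DDR4-{speed}: {peak_bandwidth_gbs(speed):.1f} GB/s peak")
```

Under these assumptions, 2400 gives exactly 75% of the 3200 peak, which is the gap a DIMM upgrade would close.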

PMem runs very hot (fully loaded) while booting into Windows. It uses around 60GB, and you need Windows 10 / 11 Pro for Workstations or Enterprise to support more than 2TB of RAM.
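For the 2TB+ point, here is a small lookup sketch. The limits are the ones I understand Microsoft publishes for x64 Windows 10 / 11 (Home 128GB, Pro 2TB, Pro for Workstations and Enterprise 6TB); double-check Microsoft's memory-limits page before relying on them. The V1 figure assumes PMem in Memory Mode, where the DRAM acts as a cache and only the 8x 512GB of PMem is visible as system memory; the V2 figure assumes 16x 32GB RDIMMs.

```python
# Max physical memory by Windows 10/11 x64 edition, in GB.
# Assumed from Microsoft's published memory limits; verify before relying on them.
TB = 1024

MAX_RAM_GB = {
    "Home": 128,
    "Pro": 2 * TB,
    "Pro for Workstations": 6 * TB,
    "Enterprise": 6 * TB,
}


def editions_that_support(installed_gb: int) -> list[str]:
    """Editions whose memory limit is at least the installed capacity."""
    return [name for name, limit in MAX_RAM_GB.items() if limit >= installed_gb]


# V1: 8x 512 GB PMem in Memory Mode (the DRAM is a cache and is not counted).
v1_gb = 8 * 512
# V2: 16x 32 GB RDIMM.
v2_gb = 16 * 32

print(v1_gb, editions_that_support(v1_gb))  # 4096 ['Pro for Workstations', 'Enterprise']
print(v2_gb, editions_that_support(v2_gb))  # 512 ['Pro', 'Pro for Workstations', 'Enterprise']
```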

On top of that, a dedicated RAM cooler, the G.Skill FTB-3500C5-D, is fitted because the case airflow is not enough. An upgraded Taobao RGB PWM compact cooler is coming soon.

GPU

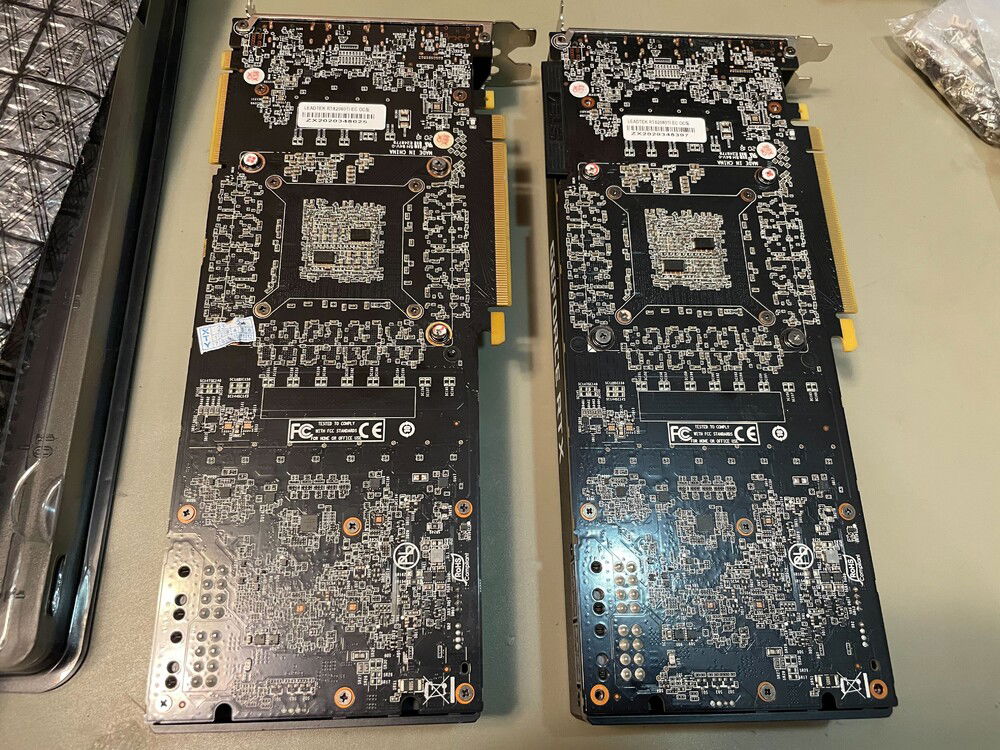

Under so many physical constraints, I chose the famous RTX 2080 Ti blower cards with the 22G mod. Both cards are identical. The card's exterior / PCB is exactly the same as the "eBay Leadtek RTX 2080 Ti blower", a non-reference PCB with only 10 VRM phases instead of 13. The 22G mod is obvious (from the same "Leadtek" manufacturer), and even the BIOS was picked at random (I received a Gigabyte blower BIOS instead of the preferred WinFast RTX 2080 Ti Hurricane one). With the wrong BIOS, power-state bugs, an undesired fan curve, or a miscalculated TDP may occur, even though core functionality (AI under CUDA) is kept. Apart from the BIOS, the PCB resembles the Gainward RTX 2080 Ti Phoenix GS, but that card's BIOS causes the bugs above.

Unlike the retail version, the card's rear bracket has reordered video outputs, leaving a single row of 3x DP + HDMI, without the USB-C connector. On the front side, the FE LED / fan connector is also removed, leaving only 2 sets of 4-pin connectors. Without the second row of video outputs blocking the exhaust, airflow is improved; together with a vapor chamber instead of full copper and the binned "300A" core, temperatures are surprisingly good: core 70C and hotspot 75C at 80% / 3000 RPM fan speed, as good as most triple-fan heatsinks like the Asus ROG STRIX 2080 Ti. I haven't checked the actual VRAM DrMOS specification, but it should be fine, just without overclocking headroom.

The build quality of the "22G custom" is fine. Lead-free solder with minimal adhesive ("502" glue / black rubber, as seen on CPU lids) has become common practice after several iterations (discussed in some Chinese GPU / notebook repair videos, for example the Lenovo Legion with its high failure rate caused by excessive adhesive and extra-low-melting-point solder). However, the packaging was inconsistent. My first card was well packaged in an unmarked box, but the second card was wrapped only in bubble wrap, which damaged solder points on the PCIe connector and left it running at PCIe 3.0 x1 only. That bandwidth limit adds about 80% to image generation time, mostly from tensor transfers between GPU and CPU. Still, it is a lot faster than cheap options like the 1080 Ti / P100 / P40 / M40.

Also, the SFF connector blocks the front end of the card, so even a perfectly fine card (e.g. a watercooled RTX 3090 capable of 4.0 x16) only gets x8, because the PCIe slot connection is incomplete.
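To get a feel for why a 3.0 x1 link hurts while x8 on a 4.0 card matters less, here is a rough sketch of raw PCIe link bandwidth. It assumes 8 GT/s for PCIe 3.0 and 16 GT/s for 4.0, both with 128b/130b encoding, and ignores packet/protocol overhead, so real throughput is lower. The 6GB payload is a hypothetical example, not a measured model size.

```python
# Rough one-direction PCIe bandwidth and transfer time.
# Assumptions: PCIe 3.0 = 8 GT/s, 4.0 = 16 GT/s, both using 128b/130b encoding;
# packet/protocol overhead is ignored, so real throughput is lower.

GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def link_gbs(gen: int, lanes: int) -> float:
    """Usable bandwidth in GB/s (10^9 bytes/s) after line encoding."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8  # 8 bits per byte


def transfer_seconds(gigabytes: float, gen: int, lanes: int) -> float:
    """Time to move a payload across the link at full raw bandwidth."""
    return gigabytes / link_gbs(gen, lanes)


PAYLOAD_GB = 6.0  # hypothetical payload size, e.g. model weights

for gen, lanes in [(3, 1), (3, 16), (4, 8), (4, 16)]:
    print(f"PCIe {gen}.0 x{lanes}: {link_gbs(gen, lanes):5.2f} GB/s, "
          f"{transfer_seconds(PAYLOAD_GB, gen, lanes):5.2f} s for {PAYLOAD_GB:g} GB")
```

Under these assumptions, 3.0 x1 has 1/16 of the raw bandwidth of 3.0 x16 (about 0.98 vs 15.75 GB/s), and 4.0 x8 matches 3.0 x16. The observed ~80% slowdown is much smaller than 16x, presumably because only part of each generation run is spent moving tensors.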

The NVLink adapter is useless here, so I didn't include it in the list. I bought it for another build (2080 Ti FE), which has already been sold.

Storage

For storage, 2x Intel / Solidigm DC P4510 3.84TB drives are installed, giving roughly 8TB. Their price may be low because many are former mining SSDs. Mine has 1.3 PBW written, with 94% health. Yes, its rated lifespan is 13.88 PBW, as shown in the drive health check! It is handy for extensive model merging, which is storage intensive. It came with a U.2 to PCIe adapter; later I bought an SFF8639 adapter cable and connected it to supported motherboards like the ASUS ROG R5E10 and EVGA X299 Dark, and finally to this board via SFF8654.
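As a sanity check on those endurance figures, here is a naive linear estimate using the 1.3 PBW written and 13.88 PBW rated from above. It gives about 90.6% remaining rather than the reported 94%, presumably because the drive derives its health value from internal wear counters rather than host writes alone. The daily write volume is a hypothetical parameter.

```python
# Naive linear SSD wear estimate from host writes vs. rated endurance.
# Inputs taken from the text: 1.3 PBW written, 13.88 PBW rated.
# The drive's own health value comes from internal wear counters, so it can differ.


def linear_health_pct(written_pb: float, rated_pb: float) -> float:
    """Percent of rated endurance left, assuming wear scales linearly with writes."""
    return max(0.0, 100.0 * (1.0 - written_pb / rated_pb))


def years_left(written_pb: float, rated_pb: float, tb_per_day: float) -> float:
    """Years until the rating is reached at a constant (hypothetical) daily write volume."""
    remaining_tb = (rated_pb - written_pb) * 1000  # 1 PB = 1000 TB
    return remaining_tb / tb_per_day / 365


print(f"{linear_health_pct(1.3, 13.88):.1f}% left")  # 90.6% left
print(f"{years_left(1.3, 13.88, tb_per_day=2.0):.1f} years at 2 TB/day")
```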

For the OS drive, however, I use a traditional single 2.5-inch SATA SSD, the Intel / Solidigm D3 S4510 1.92TB. Besides it being less sensitive to heat, I found that when both the OS drive and the model drive are on NVMe, the CPU / PCH may struggle with rapid context switching under heavy IO, making the OS unstable. Meanwhile, it also has a long lifespan of more than 6 PBW!

For the least exciting part, the WD Black Label 2TB still remains, storing non-AI / ML, non-OS stuff: usually downloaded software, documents, and maybe some BT materials.

PSU

After my old EVGA 1600W P2 started tripping because of its age, I bought a Silverstone HELA 2050 Platinum to make sure the system can withstand the notorious power spikes of the 2080 Ti.

The daily maximum power consumption is around 1200W (250W x4 for the CPUs and GPUs, plus another 200W for the board and memory), and 1500W including spikes. Idle power consumption is high, around 400W, especially since I turned off C-states because of the huge lag. Meanwhile, DSC is on for the 2080 Ti because I'm using a 4K 144Hz monitor (not listed).
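The power budget above works out roughly like this. The per-component figures are the ones quoted in the text, and the percentages are simple load-over-rating ratios, not an efficiency or transient model.

```python
# Rough PSU load using the figures quoted in the text (not measured per rail).
PSU_RATED_W = 2050

COMPONENTS_W = {
    "CPUs (2 x 250 W)": 2 * 250,
    "GPUs (2 x 250 W)": 2 * 250,
    "board + memory": 200,
}
PEAK_W = 1500  # including GPU power spikes, as reported


def load_fraction(load_w: float, rated_w: float = PSU_RATED_W) -> float:
    """Fraction of the PSU's rated output used by a given load."""
    return load_w / rated_w


sustained_w = sum(COMPONENTS_W.values())  # 1200 W
for label, load in (("sustained", sustained_w), ("peak", PEAK_W)):
    print(f"{label}: {load} W = {load_fraction(load):.0%} of {PSU_RATED_W} W")
```

Even the 1500W peak sits at roughly three quarters of the rating, which leaves some margin for the 2080 Ti transients.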

Like the 1600 P2 and most top-tier PSUs, it comes with 16 AWG cables, enough for the AMD 295X2, which is as power hungry as the Ice Lake CPUs. Since the motherboard balances the load across three CPU 8-pin connectors, each CPU 8-pin cable carries a reduced load with a bit of headroom.
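Here is a sketch of why the three CPU 8-pin connectors help, assuming both 250W CPUs draw their full TDP and the board splits the total evenly across the connectors (a simplification; the real split depends on the VRM layout).

```python
# Per-connector load when two CPUs share the board's CPU 8-pin connectors.
# Assumptions: both CPUs draw their full 250 W TDP and the board splits the
# total evenly across connectors (a simplification of the real VRM layout).

CPU_TDP_W = 250
CPU_COUNT = 2


def per_connector_w(connectors: int, cpus: int = CPU_COUNT, tdp_w: float = CPU_TDP_W) -> float:
    """Average power per connector for an even split of the total CPU load."""
    return cpus * tdp_w / connectors


for n in (2, 3):
    print(f"{n} connectors: {per_connector_w(n):.0f} W each")
```

Around 167W per connector is well below the 250W single-connector case described in the CPU power cable section.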

Cooling (Case / fan / thermal paste)

The Corsair Graphite Series 760T Full Tower Windowed Case looks like a mid tower nowadays, but technically it has 8 PCIe slots and supports "EATX", which is actually EEB size. However, although the motherboard is claimed to be SSI EEB as well, its screw holes don't follow the ATX form factor (see the image again). Therefore I used only 4 of the 9 screw holes, leaving the board hanging diagonally.

The case fans are mostly Cooler Master ARGB fans. The MasterFan MF140R and SickleFlow 140 are common ARGB fans and perform fairly well, while the MasterFan MF140 HALO is a bit compromised in airflow but easy to obtain. For the exhaust fan, after calculating the airflow (42 CFM from the CPU cooler), I switched to the JETFLO 120 BLUE LED for massive airflow, lighting, and relatively low noise.

I reused the Jonsbo FR-925 ARGB from the previous build for RAM cooling, because Cooler Master doesn't offer standalone 92mm RGB fans.

The thermal paste is technically unknown because I bought the CPU "glued" to the heatsink. However, the seller told me that it is in fact Arctic MX4, which is also common and performs consistently well. As mentioned in the CPU cooler section, the temperature is fine.

Miscellaneous

A GPU stand is important. The CoolerMaster Universal Video Card Holder is a minimalistic and useful stand. It may not be strong enough for a 4090, but it should be sufficient for 2 blower cards, which are fairly light. There is also a knockoff ARGB stand, which is used as an HDD case cover.

The front 3.5-inch bay is not empty. The Asus ROG Front Base was used in the previous build (same series as the R5E), but it is incompatible with this build. It is disabled and not included in the list.

For internet connectivity, I use the ASUS PCE-AC88 from the previous build. It reaches around 50MB/s for internet downloads.

By chance, I got a set of Phanteks NEON Digital-RGB LED Strips, including both the PH-NELEDKT_CMBO and PH-NELEDKT_M1. Now it's lit.

Ah. He is so cute.

End of part list

Thank you very much for reading my lengthy part list and analysis to the very end! I hope this build broadens your vision!


How the parts are gathered (definitely not ebay)

- To be precise, most parts are from Xianyu / Taobao / DCFever and offline deals. The custom parts listed are taken from eBay listings as "proof of existence".

- In the West (or anywhere, actually), it is *barely possible* to gather these from AliExpress and eBay, although the server motherboard should be widely available.

- The build took around a month to complete, including parts sourcing (which is a pain): daily parts hunting, catalog analysis, contacting sellers, etc.

- Listed prices are close to the actual prices paid, with minimal transportation fees (less than 1%) and no tax.


Color(s): Black

RGB Lighting? No

Theme: Technology

Cooling: Air Cooling

Size: E-ATX

Type: General Build

Build Updates

Return to ordinary WS build

Hardware

- CPU
- Motherboard: $ 1,127.40
- Memory
- Memory: $ 299.99
- Graphics: $ 859.99
- Storage: $ 83.15
- PSU: $ 550.19
- Case: $ 536.00
- Case Fan: $ 24.99
- Case Fan: $ 29.99
- Case Fan: $ 24.99
- Case Fan
- Case Fan
- Case Fan: $ 42.99
- Cooling
- Cooling
- Accessories: $ 230.00
- Accessories
- Accessories: $ 24.99
- Accessories
- Accessories: $ 19.99
